mirror of

https://github.com/acidanthera/audk.git

synced 2025-09-22 09:17:39 +02:00

IntelFsp2Pkg: Add Config Editor tool support

BZ: https://bugzilla.tianocore.org/show_bug.cgi?id=3396

This is a GUI interface that can be used by users who would like to change
configuration settings directly from the interface without having to modify
the source.

This tool depends on Python GUI tool kit Tkinter. It runs on both Windows
and Linux.

The user needs to load the YAML file along with DLT file for a specific
board into the ConfigEditor, change the desired configuration values.
Finally, generate a new configuration delta file or a config binary blob
for the newly changed values to take effect. These will be the inputs to
the merge tool or the stitch tool so that new config changes can be merged
and stitched into the final configuration blob.

This tool also supports binary update directly and display FSP information.
It is also backward compatible for BSF file format.

Running Configuration Editor:
python ConfigEditor.py

Co-authored-by: Maurice Ma <maurice.ma@intel.com>
Cc: Maurice Ma <maurice.ma@intel.com>
Cc: Nate DeSimone <nathaniel.l.desimone@intel.com>
Cc: Star Zeng <star.zeng@intel.com>
Cc: Chasel Chiu <chasel.chiu@intel.com>
Signed-off-by: Loo Tung Lun <tung.lun.loo@intel.com>
Reviewed-by: Chasel Chiu <chasel.chiu@intel.com>

This commit is contained in:

parent

55dee4947b

commit

580b11201e

504

IntelFsp2Pkg/Tools/ConfigEditor/CommonUtility.py

Normal file


@ -0,0 +1,504 @@

|

||||

#!/usr/bin/env python

|

||||

# @ CommonUtility.py

|

||||

# Common utility script

|

||||

#

|

||||

# Copyright (c) 2016 - 2021, Intel Corporation. All rights reserved.<BR>

|

||||

# SPDX-License-Identifier: BSD-2-Clause-Patent

|

||||

#

|

||||

##

|

||||

|

||||

import os

|

||||

import sys

|

||||

import shutil

|

||||

import subprocess

|

||||

import string

|

||||

from ctypes import ARRAY, c_char, c_uint16, c_uint32, \

|

||||

c_uint8, Structure, sizeof

|

||||

from importlib.machinery import SourceFileLoader

|

||||

from SingleSign import single_sign_gen_pub_key

|

||||

|

||||

|

||||

# Key types defined should match with cryptolib.h

|

||||

PUB_KEY_TYPE = {

|

||||

"RSA": 1,

|

||||

"ECC": 2,

|

||||

"DSA": 3,

|

||||

}

|

||||

|

||||

# Signing type schemes defined should match with cryptolib.h

|

||||

SIGN_TYPE_SCHEME = {

|

||||

"RSA_PKCS1": 1,

|

||||

"RSA_PSS": 2,

|

||||

"ECC": 3,

|

||||

"DSA": 4,

|

||||

}

|

||||

|

||||

# Hash values defined should match with cryptolib.h

|

||||

HASH_TYPE_VALUE = {

|

||||

"SHA2_256": 1,

|

||||

"SHA2_384": 2,

|

||||

"SHA2_512": 3,

|

||||

"SM3_256": 4,

|

||||

}

|

||||

|

||||

# Hash values defined should match with cryptolib.h

|

||||

HASH_VAL_STRING = dict(map(reversed, HASH_TYPE_VALUE.items()))

|

||||

|

||||

AUTH_TYPE_HASH_VALUE = {

|

||||

"SHA2_256": 1,

|

||||

"SHA2_384": 2,

|

||||

"SHA2_512": 3,

|

||||

"SM3_256": 4,

|

||||

"RSA2048SHA256": 1,

|

||||

"RSA3072SHA384": 2,

|

||||

}

|

||||

|

||||

HASH_DIGEST_SIZE = {

|

||||

"SHA2_256": 32,

|

||||

"SHA2_384": 48,

|

||||

"SHA2_512": 64,

|

||||

"SM3_256": 32,

|

||||

}

|

||||

|

||||

|

||||

class PUB_KEY_HDR (Structure):

|

||||

_pack_ = 1

|

||||

_fields_ = [

|

||||

('Identifier', ARRAY(c_char, 4)), # signature ('P', 'U', 'B', 'K')

|

||||

('KeySize', c_uint16), # Length of Public Key

|

||||

('KeyType', c_uint8), # RSA or ECC

|

||||

('Reserved', ARRAY(c_uint8, 1)),

|

||||

('KeyData', ARRAY(c_uint8, 0)),

|

||||

]

|

||||

|

||||

def __init__(self):

|

||||

self.Identifier = b'PUBK'

|

||||

|

||||

|

||||

class SIGNATURE_HDR (Structure):

|

||||

_pack_ = 1

|

||||

_fields_ = [

|

||||

('Identifier', ARRAY(c_char, 4)),

|

||||

('SigSize', c_uint16),

|

||||

('SigType', c_uint8),

|

||||

('HashAlg', c_uint8),

|

||||

('Signature', ARRAY(c_uint8, 0)),

|

||||

]

|

||||

|

||||

def __init__(self):

|

||||

self.Identifier = b'SIGN'

|

||||

|

||||

|

||||

class LZ_HEADER(Structure):

|

||||

_pack_ = 1

|

||||

_fields_ = [

|

||||

('signature', ARRAY(c_char, 4)),

|

||||

('compressed_len', c_uint32),

|

||||

('length', c_uint32),

|

||||

('version', c_uint16),

|

||||

('svn', c_uint8),

|

||||

('attribute', c_uint8)

|

||||

]

|

||||

_compress_alg = {

|

||||

b'LZDM': 'Dummy',

|

||||

b'LZ4 ': 'Lz4',

|

||||

b'LZMA': 'Lzma',

|

||||

}

|

||||

|

||||

|

||||

def print_bytes(data, indent=0, offset=0, show_ascii=False):

|

||||

bytes_per_line = 16

|

||||

printable = ' ' + string.ascii_letters + string.digits + string.punctuation

|

||||

str_fmt = '{:s}{:04x}: {:%ds} {:s}' % (bytes_per_line * 3)


|

||||

data_array = bytearray(data)

|

||||

for idx in range(0, len(data_array), bytes_per_line):

|

||||

hex_str = ' '.join(

|

||||

'%02X' % val for val in data_array[idx:idx + bytes_per_line])

|

||||

asc_str = ''.join('%c' % (val if (chr(val) in printable) else '.')

|

||||

for val in data_array[idx:idx + bytes_per_line])

|

||||

print(str_fmt.format(

|

||||

indent * ' ',

|

||||

offset + idx, hex_str,

|

||||

' ' + asc_str if show_ascii else ''))

|

||||

|

||||

|

||||

def get_bits_from_bytes(bytes, start, length):

|

||||

if length == 0:

|

||||

return 0

|

||||

byte_start = (start) // 8

|

||||

byte_end = (start + length - 1) // 8

|

||||

bit_start = start & 7

|

||||

mask = (1 << length) - 1

|

||||

val = bytes_to_value(bytes[byte_start:byte_end + 1])

|

||||

val = (val >> bit_start) & mask

|

||||

return val

|

||||

|

||||

|

||||

def set_bits_to_bytes(bytes, start, length, bvalue):

|

||||

if length == 0:

|

||||

return

|

||||

byte_start = (start) // 8

|

||||

byte_end = (start + length - 1) // 8

|

||||

bit_start = start & 7

|

||||

mask = (1 << length) - 1

|

||||

val = bytes_to_value(bytes[byte_start:byte_end + 1])

|

||||

val &= ~(mask << bit_start)

|

||||

val |= ((bvalue & mask) << bit_start)

|

||||

bytes[byte_start:byte_end+1] = value_to_bytearray(

|

||||

val,

|

||||

byte_end + 1 - byte_start)

|

||||

|

||||
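The two bit-field helpers above can be tried in isolation. The following is a minimal standalone sketch of the same little-endian technique; the names `get_bits` and `set_bits` are hypothetical stand-ins and not part of CommonUtility.py.

```python
def get_bits(buf, start, length):
    """Return `length` bits of `buf`, starting at bit `start` (bit 0 = LSB of byte 0)."""
    if length == 0:
        return 0
    first, last = start // 8, (start + length - 1) // 8
    # Load only the bytes the field touches, as one little-endian integer.
    word = int.from_bytes(buf[first:last + 1], 'little')
    return (word >> (start & 7)) & ((1 << length) - 1)


def set_bits(buf, start, length, value):
    """Overwrite `length` bits of bytearray `buf` in place, starting at bit `start`."""
    if length == 0:
        return
    first, last = start // 8, (start + length - 1) // 8
    shift, mask = start & 7, (1 << length) - 1
    word = int.from_bytes(buf[first:last + 1], 'little')
    # Clear the field, then OR in the new value; neighbouring bits are kept.
    word = (word & ~(mask << shift)) | ((value & mask) << shift)
    buf[first:last + 1] = word.to_bytes(last + 1 - first, 'little')


buf = bytearray(b'\x00\x00')
set_bits(buf, 4, 8, 0xAB)        # an 8-bit field that straddles both bytes
print(buf.hex(), hex(get_bits(buf, 4, 8)))
```

Loading only the touched bytes keeps the integer small even for large buffers, and the mask-then-OR step is what leaves adjacent fields untouched.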

|

||||

def value_to_bytes(value, length):

|

||||

return value.to_bytes(length, 'little')

|

||||

|

||||

|

||||

def bytes_to_value(bytes):

|

||||

return int.from_bytes(bytes, 'little')

|

||||

|

||||

|

||||

def value_to_bytearray(value, length):

|

||||

return bytearray(value_to_bytes(value, length))

|

||||


|

||||

|

||||

|

||||

def get_aligned_value(value, alignment=4):

|

||||

if alignment != (1 << (alignment.bit_length() - 1)):

|

||||

raise Exception(

|

||||

            'Alignment (0x%x) should be a power of 2!' % alignment)

|

||||

value = (value + (alignment - 1)) & ~(alignment - 1)

|

||||

return value

|

||||

|

||||

|

||||

def get_padding_length(data_len, alignment=4):

|

||||

new_data_len = get_aligned_value(data_len, alignment)

|

||||

return new_data_len - data_len

|

||||

|

||||
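The alignment arithmetic used by `get_aligned_value` and `get_padding_length` can be checked with a small standalone sketch. The names `align_up` and `padding_for` are hypothetical, and the power-of-two test here uses the `x & (x - 1)` idiom rather than the `bit_length` comparison in the listing, so that an alignment of 0 is rejected with a clear error.

```python
def align_up(value, alignment=4):
    """Round `value` up to the next multiple of a power-of-two `alignment`."""
    # A power of two has exactly one bit set, so x & (x - 1) is zero.
    if alignment <= 0 or alignment & (alignment - 1):
        raise ValueError('Alignment (0x%x) should be a power of 2!' % alignment)
    return (value + alignment - 1) & ~(alignment - 1)


def padding_for(length, alignment=4):
    """Number of pad bytes needed to bring `length` up to `alignment`."""
    return align_up(length, alignment) - length


print(align_up(13, 8), padding_for(13, 4))
```

Adding `alignment - 1` before masking pushes any value that is not already aligned past the next boundary, and the mask then clears the low bits.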

|

||||

def get_file_data(file, mode='rb'):

|

||||

    # Use a context manager so the file handle is closed promptly.
    with open(file, mode) as fin:
        return fin.read()

|

||||

|

||||

|

||||

def gen_file_from_object(file, object):

|

||||

    with open(file, 'wb') as fout:
        fout.write(object)

|

||||

|

||||

|

||||

def gen_file_with_size(file, size):

|

||||

    with open(file, 'wb') as fout:
        fout.write(b'\xFF' * size)

|

||||

|

||||

|

||||

def check_files_exist(base_name_list, dir='', ext=''):

|

||||

for each in base_name_list:

|

||||

if not os.path.exists(os.path.join(dir, each + ext)):

|

||||

return False

|

||||

return True

|

||||

|

||||

|

||||

def load_source(name, filepath):

|

||||

mod = SourceFileLoader(name, filepath).load_module()

|

||||

return mod

|

||||

|

||||

|

||||

def get_openssl_path():

|

||||

if os.name == 'nt':

|

||||

if 'OPENSSL_PATH' not in os.environ:

|

||||

openssl_dir = "C:\\Openssl\\bin\\"

|

||||

if os.path.exists(openssl_dir):

|

||||

os.environ['OPENSSL_PATH'] = openssl_dir

|

||||

else:

|

||||

os.environ['OPENSSL_PATH'] = "C:\\Openssl\\"

|

||||

if 'OPENSSL_CONF' not in os.environ:

|

||||

openssl_cfg = "C:\\Openssl\\openssl.cfg"

|

||||

if os.path.exists(openssl_cfg):

|

||||

os.environ['OPENSSL_CONF'] = openssl_cfg

|

||||

openssl = os.path.join(

|

||||

os.environ.get('OPENSSL_PATH', ''),

|

||||

'openssl.exe')

|

||||

else:

|

||||

# Get openssl path for Linux cases

|

||||

openssl = shutil.which('openssl')

|

||||

|

||||

return openssl

|

||||

|

||||

|

||||

def run_process(arg_list, print_cmd=False, capture_out=False):

|

||||

sys.stdout.flush()

|

||||

if os.name == 'nt' and os.path.splitext(arg_list[0])[1] == '' and \

|

||||

os.path.exists(arg_list[0] + '.exe'):

|

||||

arg_list[0] += '.exe'

|

||||

if print_cmd:

|

||||

print(' '.join(arg_list))

|

||||

|

||||

exc = None

|

||||

result = 0

|

||||

output = ''

|

||||

try:

|

||||

if capture_out:

|

||||

output = subprocess.check_output(arg_list).decode()

|

||||

else:

|

||||

result = subprocess.call(arg_list)

|

||||

except Exception as ex:

|

||||

result = 1

|

||||

exc = ex

|

||||

|

||||

if result:

|

||||

if not print_cmd:

|

||||

print('Error in running process:\n %s' % ' '.join(arg_list))

|

||||

if exc is None:

|

||||

sys.exit(1)

|

||||

else:

|

||||

raise exc

|

||||

|

||||

return output

|

||||

|

||||

|

||||

# Adjust hash type algorithm based on Public key file

|

||||

def adjust_hash_type(pub_key_file):

|

||||

key_type = get_key_type(pub_key_file)

|

||||

if key_type == 'RSA2048':

|

||||

hash_type = 'SHA2_256'

|

||||

elif key_type == 'RSA3072':

|

||||

hash_type = 'SHA2_384'

|

||||

else:

|

||||

hash_type = None

|

||||

|

||||

return hash_type

|

||||

|

||||

|

||||

def rsa_sign_file(

|

||||

priv_key, pub_key, hash_type, sign_scheme,

|

||||

in_file, out_file, inc_dat=False, inc_key=False):

|

||||

|

||||

bins = bytearray()

|

||||

if inc_dat:

|

||||

bins.extend(get_file_data(in_file))

|

||||

|

||||

|

||||

# def single_sign_file(priv_key, hash_type, sign_scheme, in_file, out_file):

|

||||

|

||||

out_data = get_file_data(out_file)

|

||||

|

||||

sign = SIGNATURE_HDR()

|

||||

sign.SigSize = len(out_data)

|

||||

sign.SigType = SIGN_TYPE_SCHEME[sign_scheme]

|

||||

sign.HashAlg = HASH_TYPE_VALUE[hash_type]

|

||||

|

||||

bins.extend(bytearray(sign) + out_data)

|

||||

if inc_key:

|

||||

key = gen_pub_key(priv_key, pub_key)

|

||||

bins.extend(key)

|

||||

|

||||

if len(bins) != len(out_data):

|

||||

gen_file_from_object(out_file, bins)

|

||||

|

||||

|

||||

def get_key_type(in_key):

|

||||

|

||||

# Check in_key is file or key Id

|

||||

if not os.path.exists(in_key):

|

||||

key = bytearray(gen_pub_key(in_key))

|

||||

else:

|

||||

# Check for public key in binary format.

|

||||

key = bytearray(get_file_data(in_key))

|

||||

|

||||

pub_key_hdr = PUB_KEY_HDR.from_buffer(key)

|

||||

if pub_key_hdr.Identifier != b'PUBK':

|

||||

pub_key = gen_pub_key(in_key)

|

||||

pub_key_hdr = PUB_KEY_HDR.from_buffer(pub_key)

|

||||

|

||||

key_type = next(

|

||||

(key for key,

|

||||

value in PUB_KEY_TYPE.items() if value == pub_key_hdr.KeyType))

|

||||

return '%s%d' % (key_type, (pub_key_hdr.KeySize - 4) * 8)

|

||||

|

||||

|

||||

def get_auth_hash_type(key_type, sign_scheme):

|

||||

if key_type == "RSA2048" and sign_scheme == "RSA_PKCS1":

|

||||

hash_type = 'SHA2_256'

|

||||

auth_type = 'RSA2048_PKCS1_SHA2_256'

|

||||

elif key_type == "RSA3072" and sign_scheme == "RSA_PKCS1":

|

||||

hash_type = 'SHA2_384'

|

||||

auth_type = 'RSA3072_PKCS1_SHA2_384'

|

||||

elif key_type == "RSA2048" and sign_scheme == "RSA_PSS":

|

||||

hash_type = 'SHA2_256'

|

||||

auth_type = 'RSA2048_PSS_SHA2_256'

|

||||

elif key_type == "RSA3072" and sign_scheme == "RSA_PSS":

|

||||

hash_type = 'SHA2_384'

|

||||

auth_type = 'RSA3072_PSS_SHA2_384'

|

||||

else:

|

||||

hash_type = ''

|

||||

auth_type = ''

|

||||

return auth_type, hash_type

|

||||

|

||||

|

||||

# def single_sign_gen_pub_key(in_key, pub_key_file=None):

|

||||

|

||||

|

||||

def gen_pub_key(in_key, pub_key=None):

|

||||

|

||||

keydata = single_sign_gen_pub_key(in_key, pub_key)

|

||||

|

||||

publickey = PUB_KEY_HDR()

|

||||

publickey.KeySize = len(keydata)

|

||||

publickey.KeyType = PUB_KEY_TYPE['RSA']

|

||||

|

||||

key = bytearray(publickey) + keydata

|

||||

|

||||

if pub_key:

|

||||

gen_file_from_object(pub_key, key)

|

||||

|

||||

return key

|

||||

|

||||

|

||||

def decompress(in_file, out_file, tool_dir=''):

|

||||

if not os.path.isfile(in_file):

|

||||

raise Exception("Invalid input file '%s' !" % in_file)

|

||||

|

||||

# Remove the Lz Header

|

||||

fi = open(in_file, 'rb')

|

||||

di = bytearray(fi.read())

|

||||

fi.close()

|

||||

|

||||

lz_hdr = LZ_HEADER.from_buffer(di)

|

||||

offset = sizeof(lz_hdr)

|

||||

if lz_hdr.signature == b"LZDM" or lz_hdr.compressed_len == 0:

|

||||

fo = open(out_file, 'wb')

|

||||

fo.write(di[offset:offset + lz_hdr.compressed_len])

|

||||

fo.close()

|

||||

return

|

||||

|

||||

temp = os.path.splitext(out_file)[0] + '.tmp'

|

||||

if lz_hdr.signature == b"LZMA":

|

||||

alg = "Lzma"

|

||||

elif lz_hdr.signature == b"LZ4 ":

|

||||

alg = "Lz4"

|

||||

else:

|

||||

raise Exception("Unsupported compression '%s' !" % lz_hdr.signature)

|

||||

|

||||

fo = open(temp, 'wb')

|

||||

fo.write(di[offset:offset + lz_hdr.compressed_len])

|

||||

fo.close()

|

||||

|

||||

compress_tool = "%sCompress" % alg

|

||||

if alg == "Lz4":

|

||||

try:

|

||||

cmdline = [

|

||||

os.path.join(tool_dir, compress_tool),

|

||||

"-d",

|

||||

"-o", out_file,

|

||||

temp]

|

||||

run_process(cmdline, False, True)

|

||||

except Exception:

|

||||

msg_string = "Could not find/use CompressLz4 tool, " \

|

||||

"trying with python lz4..."

|

||||

print(msg_string)

|

||||

try:

|

||||

import lz4.block

|

||||

if lz4.VERSION != '3.1.1':

|

||||

msg_string = "Recommended lz4 module version " \

|

||||

                        "is '3.1.1', " + lz4.VERSION \

|

||||

+ " is currently installed."

|

||||

print(msg_string)

|

||||

except ImportError:

|

||||

msg_string = "Could not import lz4, use " \

|

||||

"'python -m pip install lz4==3.1.1' " \

|

||||

"to install it."

|

||||

print(msg_string)

|

||||

                sys.exit(1)

|

||||

decompress_data = lz4.block.decompress(get_file_data(temp))

|

||||

with open(out_file, "wb") as lz4bin:

|

||||

lz4bin.write(decompress_data)

|

||||

else:

|

||||

cmdline = [

|

||||

os.path.join(tool_dir, compress_tool),

|

||||

"-d",

|

||||

"-o", out_file,

|

||||

temp]

|

||||

run_process(cmdline, False, True)

|

||||

os.remove(temp)

|

||||

|

||||

|

||||

def compress(in_file, alg, svn=0, out_path='', tool_dir=''):

|

||||

if not os.path.isfile(in_file):

|

||||

raise Exception("Invalid input file '%s' !" % in_file)

|

||||

|

||||

basename, ext = os.path.splitext(os.path.basename(in_file))

|

||||

if out_path:

|

||||

if os.path.isdir(out_path):

|

||||

out_file = os.path.join(out_path, basename + '.lz')

|

||||

else:

|

||||

out_file = os.path.join(out_path)

|

||||

else:

|

||||

out_file = os.path.splitext(in_file)[0] + '.lz'

|

||||

|

||||

if alg == "Lzma":

|

||||

sig = "LZMA"

|

||||

elif alg == "Tiano":

|

||||

sig = "LZUF"

|

||||

elif alg == "Lz4":

|

||||

sig = "LZ4 "

|

||||

elif alg == "Dummy":

|

||||

sig = "LZDM"

|

||||

else:

|

||||

raise Exception("Unsupported compression '%s' !" % alg)

|

||||

|

||||

in_len = os.path.getsize(in_file)

|

||||

if in_len > 0:

|

||||

compress_tool = "%sCompress" % alg

|

||||

if sig == "LZDM":

|

||||

shutil.copy(in_file, out_file)

|

||||

compress_data = get_file_data(out_file)

|

||||

elif sig == "LZ4 ":

|

||||

try:

|

||||

cmdline = [

|

||||

os.path.join(tool_dir, compress_tool),

|

||||

"-e",

|

||||

"-o", out_file,

|

||||

in_file]

|

||||

run_process(cmdline, False, True)

|

||||

compress_data = get_file_data(out_file)

|

||||

except Exception:

|

||||

msg_string = "Could not find/use CompressLz4 tool, " \

|

||||

"trying with python lz4..."

|

||||

print(msg_string)

|

||||

try:

|

||||

import lz4.block

|

||||

if lz4.VERSION != '3.1.1':

|

||||

msg_string = "Recommended lz4 module version " \

|

||||

"is '3.1.1', " + lz4.VERSION \

|

||||

+ " is currently installed."

|

||||

print(msg_string)

|

||||

except ImportError:

|

||||

msg_string = "Could not import lz4, use " \

|

||||

"'python -m pip install lz4==3.1.1' " \

|

||||

"to install it."

|

||||

print(msg_string)

|

||||

                    sys.exit(1)

|

||||

compress_data = lz4.block.compress(

|

||||

get_file_data(in_file),

|

||||

mode='high_compression')

|

||||

elif sig == "LZMA":

|

||||

cmdline = [

|

||||

os.path.join(tool_dir, compress_tool),

|

||||

"-e",

|

||||

"-o", out_file,

|

||||

in_file]

|

||||

run_process(cmdline, False, True)

|

||||

compress_data = get_file_data(out_file)

|

||||

else:

|

||||

compress_data = bytearray()

|

||||

|

||||

lz_hdr = LZ_HEADER()

|

||||

lz_hdr.signature = sig.encode()

|

||||

lz_hdr.svn = svn

|

||||

lz_hdr.compressed_len = len(compress_data)

|

||||

lz_hdr.length = os.path.getsize(in_file)

|

||||

data = bytearray()

|

||||

data.extend(lz_hdr)

|

||||

data.extend(compress_data)

|

||||

gen_file_from_object(out_file, data)

|

||||

|

||||

return out_file

|

||||
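The `compress` and `decompress` functions above both rely on the packed `LZ_HEADER` layout. As a rough illustration of how that 16-byte header wraps and unwraps a payload, here is a standalone sketch that handles only the uncompressed `LZDM` ("Dummy") case; `LzHeader`, `wrap` and `unwrap` are hypothetical names, and no external compression tool is invoked.

```python
from ctypes import Structure, c_char, c_uint8, c_uint16, c_uint32, sizeof


class LzHeader(Structure):
    # Same field order and packing as LZ_HEADER in the listing.
    _pack_ = 1
    _fields_ = [
        ('signature', c_char * 4),
        ('compressed_len', c_uint32),
        ('length', c_uint32),
        ('version', c_uint16),
        ('svn', c_uint8),
        ('attribute', c_uint8),
    ]


def wrap(payload, sig=b'LZDM', svn=0):
    """Prefix `payload` with a header; for LZDM the data is stored as-is."""
    hdr = LzHeader()
    hdr.signature = sig
    hdr.svn = svn
    hdr.compressed_len = len(payload)
    hdr.length = len(payload)
    return bytearray(hdr) + payload


def unwrap(blob):
    """Return (signature, stored payload) from a header-prefixed bytearray."""
    hdr = LzHeader.from_buffer(blob)      # from_buffer needs a writable buffer
    offset = sizeof(hdr)
    return hdr.signature, bytes(blob[offset:offset + hdr.compressed_len])


blob = wrap(b'hello')
print(sizeof(LzHeader), unwrap(blob))
```

Because `from_buffer` shares memory with the bytearray instead of copying it, the header fields can be read without any manual `struct` unpacking.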

1499

IntelFsp2Pkg/Tools/ConfigEditor/ConfigEditor.py

Normal file


File diff suppressed because it is too large


2252

IntelFsp2Pkg/Tools/ConfigEditor/GenYamlCfg.py

Normal file


File diff suppressed because it is too large


324

IntelFsp2Pkg/Tools/ConfigEditor/SingleSign.py

Normal file


@ -0,0 +1,324 @@

|

||||

#!/usr/bin/env python

|

||||

# @ SingleSign.py

|

||||

# Single signing script

|

||||

#

|

||||

# Copyright (c) 2020 - 2021, Intel Corporation. All rights reserved.<BR>

|

||||

# SPDX-License-Identifier: BSD-2-Clause-Patent

|

||||

#

|

||||

##

|

||||

|

||||

import os

|

||||

import sys

|

||||

import re

|

||||

import shutil

|

||||

import subprocess

|

||||

|

||||

SIGNING_KEY = {

|

||||

# Key Id | Key File Name start |

|

||||

# =================================================================

|

||||

# KEY_ID_MASTER is used for signing Slimboot Key Hash Manifest \

|

||||

# container (KEYH Component)

|

||||

"KEY_ID_MASTER_RSA2048": "MasterTestKey_Priv_RSA2048.pem",

|

||||

"KEY_ID_MASTER_RSA3072": "MasterTestKey_Priv_RSA3072.pem",

|

||||

|

||||

    # KEY_ID_CFGDATA is used for signing external Config data blob

|

||||

"KEY_ID_CFGDATA_RSA2048": "ConfigTestKey_Priv_RSA2048.pem",

|

||||

"KEY_ID_CFGDATA_RSA3072": "ConfigTestKey_Priv_RSA3072.pem",

|

||||

|

||||

    # KEY_ID_FIRMWAREUPDATE is used for signing capsule firmware update image

|

||||

"KEY_ID_FIRMWAREUPDATE_RSA2048": "FirmwareUpdateTestKey_Priv_RSA2048.pem",

|

||||

"KEY_ID_FIRMWAREUPDATE_RSA3072": "FirmwareUpdateTestKey_Priv_RSA3072.pem",

|

||||

|

||||

# KEY_ID_CONTAINER is used for signing container header with mono signature

|

||||

"KEY_ID_CONTAINER_RSA2048": "ContainerTestKey_Priv_RSA2048.pem",

|

||||

"KEY_ID_CONTAINER_RSA3072": "ContainerTestKey_Priv_RSA3072.pem",

|

||||

|

||||

# CONTAINER_COMP1_KEY_ID is used for signing container components

|

||||

"KEY_ID_CONTAINER_COMP_RSA2048": "ContainerCompTestKey_Priv_RSA2048.pem",

|

||||

"KEY_ID_CONTAINER_COMP_RSA3072": "ContainerCompTestKey_Priv_RSA3072.pem",

|

||||

|

||||

# KEY_ID_OS1_PUBLIC, KEY_ID_OS2_PUBLIC is used for referencing \

|

||||

# Boot OS public keys

|

||||

"KEY_ID_OS1_PUBLIC_RSA2048": "OS1_TestKey_Pub_RSA2048.pem",

|

||||

"KEY_ID_OS1_PUBLIC_RSA3072": "OS1_TestKey_Pub_RSA3072.pem",

|

||||

|

||||

"KEY_ID_OS2_PUBLIC_RSA2048": "OS2_TestKey_Pub_RSA2048.pem",

|

||||

"KEY_ID_OS2_PUBLIC_RSA3072": "OS2_TestKey_Pub_RSA3072.pem",

|

||||

|

||||

}

|

||||

|

||||

MESSAGE_SBL_KEY_DIR = (
    "!!! PRE-REQUISITE: Path to SBL_KEY_DIR has to be set with "
    "SBL KEYS DIRECTORY !!!\n"
    "!!! Generate keys using GenerateKeys.py available in "
    "BootloaderCorePkg/Tools directory !!!\n"
    "!!! Run $python BootloaderCorePkg/Tools/GenerateKeys.py "
    "-k $PATH_TO_SBL_KEY_DIR !!!\n"
    "!!! Set SBL_KEY_DIR environ with path to SBL KEYS DIR !!!\n"
    "!!! Windows $set SBL_KEY_DIR=$PATH_TO_SBL_KEY_DIR !!!\n"
    "!!! Linux $export SBL_KEY_DIR=$PATH_TO_SBL_KEY_DIR !!!\n"
)

|

||||

|

||||

|

||||

def get_openssl_path():

|

||||

if os.name == 'nt':

|

||||

if 'OPENSSL_PATH' not in os.environ:

|

||||

openssl_dir = "C:\\Openssl\\bin\\"

|

||||

if os.path.exists(openssl_dir):

|

||||

os.environ['OPENSSL_PATH'] = openssl_dir

|

||||

else:

|

||||

os.environ['OPENSSL_PATH'] = "C:\\Openssl\\"

|

||||

if 'OPENSSL_CONF' not in os.environ:

|

||||

openssl_cfg = "C:\\Openssl\\openssl.cfg"

|

||||

if os.path.exists(openssl_cfg):

|

||||

os.environ['OPENSSL_CONF'] = openssl_cfg

|

||||

openssl = os.path.join(

|

||||

os.environ.get('OPENSSL_PATH', ''),

|

||||

'openssl.exe')

|

||||

else:

|

||||

# Get openssl path for Linux cases

|

||||

openssl = shutil.which('openssl')

|

||||

|

||||

return openssl

|

||||

|

||||

|

||||

def run_process(arg_list, print_cmd=False, capture_out=False):

|

||||

sys.stdout.flush()

|

||||

if print_cmd:

|

||||

print(' '.join(arg_list))

|

||||

|

||||

exc = None

|

||||

result = 0

|

||||

output = ''

|

||||

try:

|

||||

if capture_out:

|

||||

output = subprocess.check_output(arg_list).decode()

|

||||

else:

|

||||

result = subprocess.call(arg_list)

|

||||

except Exception as ex:

|

||||

result = 1

|

||||

exc = ex

|

||||

|

||||

if result:

|

||||

if not print_cmd:

|

||||

print('Error in running process:\n %s' % ' '.join(arg_list))

|

||||

if exc is None:

|

||||

sys.exit(1)

|

||||

else:

|

||||

raise exc

|

||||

|

||||

return output

|

||||

|

||||

|

||||

def check_file_pem_format(priv_key):

|

||||

# Check for file .pem format

|

||||

key_name = os.path.basename(priv_key)

|

||||

if os.path.splitext(key_name)[1] == ".pem":

|

||||

return True

|

||||

else:

|

||||

return False

|

||||

|

||||

|

||||

def get_key_id(priv_key):

|

||||

# Extract base name if path is provided.

|

||||

key_name = os.path.basename(priv_key)

|

||||

# Check for KEY_ID in key naming.

|

||||

if key_name.startswith('KEY_ID'):

|

||||

return key_name

|

||||

else:

|

||||

return None

|

||||

|

||||

|

||||

def get_sbl_key_dir():

|

||||

# Check Key store setting SBL_KEY_DIR path

|

||||

if 'SBL_KEY_DIR' not in os.environ:

|

||||

exception_string = "ERROR: SBL_KEY_DIR is not defined." \

|

||||

" Set SBL_KEY_DIR with SBL Keys directory!!\n"

|

||||

raise Exception(exception_string + MESSAGE_SBL_KEY_DIR)

|

||||

|

||||

sbl_key_dir = os.environ.get('SBL_KEY_DIR')

|

||||

if not os.path.exists(sbl_key_dir):

|

||||

        exception_string = "ERROR: SBL_KEY_DIR set " + sbl_key_dir \

|

||||

+ " is not valid." \

|

||||

" Set the correct SBL_KEY_DIR path !!\n" \

|

||||

+ MESSAGE_SBL_KEY_DIR

|

||||

raise Exception(exception_string)

|

||||

else:

|

||||

return sbl_key_dir

|

||||

|

||||

|

||||

def get_key_from_store(in_key):

|

||||

|

||||

# Check in_key is path to key

|

||||

if os.path.exists(in_key):

|

||||

return in_key

|

||||

|

||||

# Get Slimboot key dir path

|

||||

sbl_key_dir = get_sbl_key_dir()

|

||||

|

||||

# Extract if in_key is key_id

|

||||

priv_key = get_key_id(in_key)

|

||||

if priv_key is not None:

|

||||

if (priv_key in SIGNING_KEY):

|

||||

# Generate key file name from key id

|

||||

priv_key_file = SIGNING_KEY[priv_key]

|

||||

else:

|

||||

            exception_string = "KEY_ID " + priv_key + \
                " is not found in supported KEY IDs!!"

|

||||

raise Exception(exception_string)

|

||||

elif check_file_pem_format(in_key):

|

||||

# check if file name is provided in pem format

|

||||

priv_key_file = in_key

|

||||

else:

|

||||

priv_key_file = None

|

||||

raise Exception('key provided %s is not valid!' % in_key)

|

||||

|

||||

# Create a file path

|

||||

# Join Key Dir and priv_key_file

|

||||

try:

|

||||

priv_key = os.path.join(sbl_key_dir, priv_key_file)

|

||||

except Exception:

|

||||

raise Exception('priv_key is not found %s!' % priv_key)

|

||||

|

||||

    # Check that priv_key constructed from the KEY ID exists in the given path

|

||||

if not os.path.isfile(priv_key):

|

||||

        exception_string = "!!! ERROR: Key file corresponding to " \
            + in_key + " does not exist in Sbl key " \
            "directory at " + sbl_key_dir + " !!! \n" \

|

||||

+ MESSAGE_SBL_KEY_DIR

|

||||

raise Exception(exception_string)

|

||||

|

||||

return priv_key

|

||||

|

||||

#

|

||||

# Sign a file using openssl

|

||||

#

|

||||

# priv_key [Input] Key Id or Path to Private key

|

||||

# hash_type [Input] Signing hash

|

||||

# sign_scheme[Input] Sign/padding scheme

|

||||

# in_file [Input] Input file to be signed

|

||||

# out_file [Input/Output] Signed data file

|

||||

#

|

||||

|

||||

|

||||

def single_sign_file(priv_key, hash_type, sign_scheme, in_file, out_file):

|

||||

|

||||

_hash_type_string = {

|

||||

"SHA2_256": 'sha256',

|

||||

"SHA2_384": 'sha384',

|

||||

"SHA2_512": 'sha512',

|

||||

}

|

||||

|

||||

_hash_digest_Size = {

|

||||

# Hash_string : Hash_Size

|

||||

"SHA2_256": 32,

|

||||

"SHA2_384": 48,

|

||||

"SHA2_512": 64,

|

||||

"SM3_256": 32,

|

||||

}

|

||||

|

||||

_sign_scheme_string = {

|

||||

"RSA_PKCS1": 'pkcs1',

|

||||

"RSA_PSS": 'pss',

|

||||

}

|

||||

|

||||

priv_key = get_key_from_store(priv_key)

|

||||

|

||||

# Temporary files to store hash generated

|

||||

hash_file_tmp = out_file+'.hash.tmp'

|

||||

hash_file = out_file+'.hash'

|

||||

|

||||

# Generate hash using openssl dgst in hex format

|

||||

cmdargs = [get_openssl_path(),

|

||||

'dgst',

|

||||

'-'+'%s' % _hash_type_string[hash_type],

|

||||

'-out', '%s' % hash_file_tmp, '%s' % in_file]

|

||||

run_process(cmdargs)

|

||||

|

||||

    # Extract hash from dgst command output and convert to ascii

|

||||

with open(hash_file_tmp, 'r') as fin:

|

||||

hashdata = fin.read()


|

||||

|

||||

try:

|

||||

hashdata = hashdata.rsplit('=', 1)[1].strip()

|

||||

except Exception:

|

||||

raise Exception('Hash Data not found for signing!')

|

||||

|

||||

if len(hashdata) != (_hash_digest_Size[hash_type] * 2):

|

||||

        raise Exception('Hash data size does not match the hash type!')

|

||||

|

||||

hashdata_bytes = bytearray.fromhex(hashdata)

|

||||

open(hash_file, 'wb').write(hashdata_bytes)

|

||||

|

||||

    print("Key used for Signing %s !!" % priv_key)

|

||||

|

||||

# sign using Openssl pkeyutl

|

||||

cmdargs = [get_openssl_path(),

|

||||

'pkeyutl', '-sign', '-in', '%s' % hash_file,

|

||||

'-inkey', '%s' % priv_key, '-out',

|

||||

'%s' % out_file, '-pkeyopt',

|

||||

'digest:%s' % _hash_type_string[hash_type],

|

||||

'-pkeyopt', 'rsa_padding_mode:%s' %

|

||||

_sign_scheme_string[sign_scheme]]

|

||||

|

||||

run_process(cmdargs)

|

||||

|

||||

return

|

||||
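`single_sign_file` above recovers the digest by splitting the `openssl dgst` output on its last `=` and checking the hex length against the expected digest size. A minimal sketch of just that parsing step follows. It assumes the output is a single line of roughly the form `SHA256(file)= <hex>`; the exact label varies between OpenSSL versions, the sample line is built with `hashlib` rather than by running openssl, and `parse_dgst_line` is a hypothetical name.

```python
import hashlib

# Digest sizes in bytes, mirroring the table used in the listing.
DIGEST_SIZE = {'SHA2_256': 32, 'SHA2_384': 48, 'SHA2_512': 64}


def parse_dgst_line(text, hash_type):
    """Extract the raw digest bytes from one line of `openssl dgst` text output."""
    # Split on the LAST '=' so a file name containing '=' does not confuse us.
    _, sep, hexdigest = text.rpartition('=')
    if not sep:
        raise ValueError('Hash data not found for signing!')
    hexdigest = hexdigest.strip()
    if len(hexdigest) != DIGEST_SIZE[hash_type] * 2:
        raise ValueError('Hash data size does not match the hash type!')
    return bytes.fromhex(hexdigest)


# Assumed sample line; a real one would come from the openssl command.
sample = 'SHA256(payload.bin)= ' + hashlib.sha256(b'abc').hexdigest() + '\n'
digest = parse_dgst_line(sample, 'SHA2_256')
print(digest.hex())
```

The length check is a cheap guard against a truncated or mislabelled hash file before the raw digest is handed to `openssl pkeyutl -sign`.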

|

||||

#

|

||||

# Extract public key using openssl

|

||||

#

|

||||

# in_key [Input] Private key or public key in pem format

|

||||

# pub_key_file [Input/Output] Public Key to a file

|

||||

#

|

||||

# return keydata (mod, exp) in bin format

|

||||

#

|

||||

|

||||

|

||||

def single_sign_gen_pub_key(in_key, pub_key_file=None):

|

||||

|

||||

in_key = get_key_from_store(in_key)

|

||||

|

||||

# Expect key to be in PEM format

|

||||

is_prv_key = False

|

||||

cmdline = [get_openssl_path(), 'rsa', '-pubout', '-text', '-noout',

|

||||

'-in', '%s' % in_key]

|

||||

# Check if it is public key or private key

|

||||

text = open(in_key, 'r').read()

|

||||

if '-BEGIN RSA PRIVATE KEY-' in text:

|

||||

is_prv_key = True

|

||||

elif '-BEGIN PUBLIC KEY-' in text:

|

||||

cmdline.extend(['-pubin'])

|

||||

else:

|

||||

raise Exception('Unknown key format "%s" !' % in_key)

|

||||

|

||||

if pub_key_file:

|

||||

cmdline.extend(['-out', '%s' % pub_key_file])

|

||||

capture = False

|

||||

else:

|

||||

capture = True

|

||||

|

||||

output = run_process(cmdline, capture_out=capture)

|

||||

if not capture:

|

||||

output = text = open(pub_key_file, 'r').read()

|

||||

data = output.replace('\r', '')

|

||||

data = data.replace('\n', '')

|

||||

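    # Strip only the indentation (runs of two spaces); the regexes below
    # rely on the single spaces around the exponent value being preserved.
    data = data.replace('  ', '')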

|

||||

|

||||

# Extract the modulus

|

||||

if is_prv_key:

|

||||

match = re.search('modulus(.*)publicExponent:\\s+(\\d+)\\s+', data)

|

||||

else:

|

||||

match = re.search('Modulus(?:.*?):(.*)Exponent:\\s+(\\d+)\\s+', data)

|

||||

if not match:

|

||||

raise Exception('Public key not found!')

|

||||

modulus = match.group(1).replace(':', '')

|

||||

exponent = int(match.group(2))

|

||||

|

||||

mod = bytearray.fromhex(modulus)

|

||||

# Remove the '00' from the front if the MSB is 1

|

||||

if mod[0] == 0 and (mod[1] & 0x80):

|

||||

mod = mod[1:]

|

||||

exp = bytearray.fromhex('{:08x}'.format(exponent))

|

||||

|

||||

keydata = mod + exp

|

||||

|

||||

return keydata

|

||||
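The key data returned by `single_sign_gen_pub_key` is the modulus followed by a 4-byte exponent, which is why `get_key_type` in CommonUtility.py computes the key width as `(KeySize - 4) * 8`. The sketch below reproduces only that packing step with a made-up modulus. `pack_rsa_key_data` and `key_bits` are hypothetical names, and the modulus is filler data, not a real key.

```python
def pack_rsa_key_data(modulus_hex, exponent):
    """Pack an RSA public key as modulus bytes followed by a 4-byte exponent."""
    mod = bytearray.fromhex(modulus_hex)
    # openssl prints a leading 00 when the top bit of the modulus is set,
    # to keep the integer positive; drop it so the length matches the key size.
    if len(mod) > 1 and mod[0] == 0 and (mod[1] & 0x80):
        mod = mod[1:]
    # Same bytes as bytearray.fromhex('{:08x}'.format(exponent)) in the listing.
    return mod + exponent.to_bytes(4, 'big')


def key_bits(keydata):
    """Key width in bits: everything except the trailing 4 exponent bytes."""
    return (len(keydata) - 4) * 8


# Made-up 2048-bit modulus with the sign-padding byte openssl would print.
sample_modulus = '00' + 'c5' * 256
keydata = pack_rsa_key_data(sample_modulus, 65537)
print(len(keydata), 'RSA%d' % key_bits(keydata))
```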

@ -10,277 +10,38 @@

|

||||

import os

|

||||

import re

|

||||

import sys

|

||||

from datetime import date

|

||||

from collections import OrderedDict

|

||||

from functools import reduce

|

||||

|

||||

from GenCfgOpt import CGenCfgOpt

|

||||

from collections import OrderedDict

|

||||

from datetime import date

|

||||

|

||||

from FspGenCfgData import CFspBsf2Dsc, CGenCfgData

|

||||

|

||||

__copyright_tmp__ = """## @file

|

||||

#

|

||||

# YAML CFGDATA %s File.

|

||||

# Slim Bootloader CFGDATA %s File.

|

||||

#

|

||||

# Copyright(c) %4d, Intel Corporation. All rights reserved.<BR>

|

||||

# Copyright (c) %4d, Intel Corporation. All rights reserved.<BR>

|

||||

# SPDX-License-Identifier: BSD-2-Clause-Patent

|

||||

#

|

||||

##

|

||||

"""

|

||||

|

||||

__copyright_dsc__ = """## @file

|

||||

#

|

||||

# Copyright (c) %04d, Intel Corporation. All rights reserved.<BR>

|

||||

# SPDX-License-Identifier: BSD-2-Clause-Patent

|

||||

#

|

||||

##

|

||||

|

||||

[PcdsDynamicVpd.Upd]

|

||||

#

|

||||

# Global definitions in BSF

|

||||

# !BSF BLOCK:{NAME:"FSP UPD Configuration", VER:"0.1"}

|

||||

#

|

||||

|

||||

"""

|

||||

|

||||

|

||||

def Bytes2Val(Bytes):

|

||||

return reduce(lambda x, y: (x << 8) | y, Bytes[::-1])

|

||||

|

||||

|

||||

def Str2Bytes(Value, Blen):

|

||||

Result = bytearray(Value[1:-1], 'utf-8') # Excluding quotes

|

||||

if len(Result) < Blen:

|

||||

Result.extend(b'\x00' * (Blen - len(Result)))

|

||||

return Result

|

||||

|

||||

|

||||

class CFspBsf2Dsc:

|

||||

|

||||

def __init__(self, bsf_file):

|

||||

self.cfg_list = CFspBsf2Dsc.parse_bsf(bsf_file)

|

||||

|

||||

def get_dsc_lines(self):

|

||||

return CFspBsf2Dsc.generate_dsc(self.cfg_list)

|

||||

|

||||

def save_dsc(self, dsc_file):

|

||||

return CFspBsf2Dsc.generate_dsc(self.cfg_list, dsc_file)

|

||||

|

||||

@staticmethod

|

||||

def parse_bsf(bsf_file):

|

||||

|

||||

fd = open(bsf_file, 'r')

|

||||

bsf_txt = fd.read()

|

||||

fd.close()

|

||||

|

||||

find_list = []

|

||||

regex = re.compile(r'\s+Find\s+"(.*?)"(.*?)^\s+\$(.*?)\s+', re.S | re.MULTILINE)

|

||||

for match in regex.finditer(bsf_txt):

|

||||

find = match.group(1)

|

||||

name = match.group(3)

|

||||

if not name.endswith('_Revision'):

|

||||

raise Exception("Unexpected CFG item following 'Find' !")

|

||||

find_list.append((name, find))

|

||||

|

||||

idx = 0

|

||||

count = 0

|

||||

prefix = ''

|

||||

chk_dict = {}

|

||||

cfg_list = []

|

||||

cfg_temp = {'find': '', 'cname': '', 'length': 0, 'value': '0', 'type': 'Reserved',

|

||||

'embed': '', 'page': '', 'option': '', 'instance': 0}

|

||||

regex = re.compile(r'^\s+(\$(.*?)|Skip)\s+(\d+)\s+bytes(\s+\$_DEFAULT_\s+=\s+(.+?))?$',

|

||||

re.S | re.MULTILINE)

|

||||

|

||||

for match in regex.finditer(bsf_txt):

|

||||

dlen = int(match.group(3))

|

||||

if match.group(1) == 'Skip':

|

||||

key = 'gPlatformFspPkgTokenSpaceGuid_BsfSkip%d' % idx

|

||||

val = ', '.join(['%02X' % ord(i) for i in '\x00' * dlen])

|

||||

idx += 1

|

||||

option = '$SKIP'

|

||||

else:

|

||||

key = match.group(2)

|

||||

val = match.group(5)

|

||||

option = ''

|

||||

|

||||

cfg_item = dict(cfg_temp)

|

||||

finds = [i for i in find_list if i[0] == key]

|

||||

if len(finds) > 0:

|

||||

if count >= 1:

|

||||

# Append a dummy one

|

||||

cfg_item['cname'] = 'Dummy'

|

||||

cfg_list.append(dict(cfg_item))

|

||||

cfg_list[-1]['embed'] = '%s:TAG_%03X:END' % (prefix, ord(prefix[-1]))

|

||||

prefix = finds[0][1]

|

||||

cfg_item['embed'] = '%s:TAG_%03X:START' % (prefix, ord(prefix[-1]))

|

||||

cfg_item['find'] = prefix

|

||||

cfg_item['cname'] = 'Signature'

|

||||

cfg_item['length'] = len(finds[0][1])

|

||||

str2byte = Str2Bytes("'" + finds[0][1] + "'", len(finds[0][1]))

|

||||

cfg_item['value'] = '0x%X' % Bytes2Val(str2byte)

|

||||

cfg_list.append(dict(cfg_item))

|

||||

cfg_item = dict(cfg_temp)

|

||||

find_list.pop(0)

|

||||

count = 0

|

||||

|

||||

cfg_item['cname'] = key

|

||||

cfg_item['length'] = dlen

|

||||

cfg_item['value'] = val

|

||||

cfg_item['option'] = option

|

||||

|

||||

if key not in chk_dict.keys():

|

||||

chk_dict[key] = 0

|

||||

else:

|

||||

chk_dict[key] += 1

|

||||

cfg_item['instance'] = chk_dict[key]

|

||||

|

||||

cfg_list.append(cfg_item)

|

||||

count += 1

|

||||

|

||||

if prefix:

|

||||

cfg_item = dict(cfg_temp)

|

||||

cfg_item['cname'] = 'Dummy'

|

||||

cfg_item['embed'] = '%s:%03X:END' % (prefix, ord(prefix[-1]))

|

||||

cfg_list.append(cfg_item)

|

||||

|

||||

option_dict = {}

|

||||

selreg = re.compile(r'\s+Selection\s*(.+?)\s*,\s*"(.*?)"$', re.S | re.MULTILINE)

|

||||

regex = re.compile(r'^List\s&(.+?)$(.+?)^EndList$', re.S | re.MULTILINE)

|

||||

for match in regex.finditer(bsf_txt):

|

||||

key = match.group(1)

|

||||

option_dict[key] = []

|

||||

for select in selreg.finditer(match.group(2)):

|

||||

option_dict[key].append((int(select.group(1), 0), select.group(2)))

|

||||

|

||||

chk_dict = {}

|

||||

pagereg = re.compile(r'^Page\s"(.*?)"$(.+?)^EndPage$', re.S | re.MULTILINE)

|

||||

for match in pagereg.finditer(bsf_txt):

|

||||

page = match.group(1)

|

||||

for line in match.group(2).splitlines():

|

||||

match = re.match(r'\s+(Combo|EditNum)\s\$(.+?),\s"(.*?)",\s(.+?),$', line)

|

||||

if match:

|

||||

cname = match.group(2)

|

||||

if cname not in chk_dict.keys():

|

||||

chk_dict[cname] = 0

|

||||

else:

|

||||

chk_dict[cname] += 1

|

||||

instance = chk_dict[cname]

|

||||

cfg_idxs = [i for i, j in enumerate(cfg_list) if j['cname'] == cname and j['instance'] == instance]

|

||||

if len(cfg_idxs) != 1:

|

||||

raise Exception("Multiple CFG item '%s' found !" % cname)

|

||||

cfg_item = cfg_list[cfg_idxs[0]]

|

||||

cfg_item['page'] = page

|

||||

cfg_item['type'] = match.group(1)

|

||||

cfg_item['prompt'] = match.group(3)

|

||||

cfg_item['range'] = None

|

||||

if cfg_item['type'] == 'Combo':

|

||||

cfg_item['option'] = option_dict[match.group(4)[1:]]

|

||||

elif cfg_item['type'] == 'EditNum':

|

||||

cfg_item['option'] = match.group(4)

|

||||

match = re.match(r'\s+ Help\s"(.*?)"$', line)

|

||||

if match:

|

||||

cfg_item['help'] = match.group(1)

|

||||

|

||||

match = re.match(r'\s+"Valid\srange:\s(.*)"$', line)

|

||||

if match:

|

||||

parts = match.group(1).split()

|

||||

cfg_item['option'] = (

|

||||

(int(parts[0], 0), int(parts[2], 0), cfg_item['option']))

|

||||

|

||||

return cfg_list

|

||||

|

||||

@staticmethod

|

||||

def generate_dsc(option_list, dsc_file=None):

|

||||

dsc_lines = []

|

||||

header = '%s' % (__copyright_dsc__ % date.today().year)

|

||||

dsc_lines.extend(header.splitlines())

|

||||

|

||||

pages = []

|

||||

for cfg_item in option_list:

|

||||

if cfg_item['page'] and (cfg_item['page'] not in pages):

|

||||

pages.append(cfg_item['page'])

|

||||

|

||||

page_id = 0

|

||||

for page in pages:

|

||||

dsc_lines.append(' # !BSF PAGES:{PG%02X::"%s"}' % (page_id, page))

|

||||

page_id += 1

|

||||

dsc_lines.append('')

|

||||

|

||||

last_page = ''

|

||||

for option in option_list:

|

||||

dsc_lines.append('')

|

||||

default = option['value']

|

||||

pos = option['cname'].find('_')

|

||||

name = option['cname'][pos + 1:]

|

||||

|

||||

if option['find']:

|

||||

dsc_lines.append(' # !BSF FIND:{%s}' % option['find'])

|

||||

dsc_lines.append('')

|

||||

|

||||

if option['instance'] > 0:

|

||||

name = name + '_%s' % option['instance']

|

||||

|

||||

if option['embed']:

|

||||

dsc_lines.append(' # !HDR EMBED:{%s}' % option['embed'])

|

||||

|

||||

if option['type'] == 'Reserved':

|

||||

dsc_lines.append(' # !BSF NAME:{Reserved} TYPE:{Reserved}')

|

||||

if option['option'] == '$SKIP':

|

||||

dsc_lines.append(' # !BSF OPTION:{$SKIP}')

|

||||

else:

|

||||

prompt = option['prompt']

|

||||

|

||||

if last_page != option['page']:

|

||||

last_page = option['page']

|

||||

dsc_lines.append(' # !BSF PAGE:{PG%02X}' % (pages.index(option['page'])))

|

||||

|

||||

if option['type'] == 'Combo':

|

||||

dsc_lines.append(' # !BSF NAME:{%s} TYPE:{%s}' %

|

||||

(prompt, option['type']))

|

||||

ops = []

|

||||

for val, text in option['option']:

|

||||

ops.append('0x%x:%s' % (val, text))

|

||||

dsc_lines.append(' # !BSF OPTION:{%s}' % (', '.join(ops)))

|

||||

elif option['type'] == 'EditNum':

|

||||

cfg_len = option['length']

|

||||

if ',' in default and cfg_len > 8:

|

||||

dsc_lines.append(' # !BSF NAME:{%s} TYPE:{Table}' % (prompt))

|

||||

if cfg_len > 16:

|

||||

cfg_len = 16

|

||||

ops = []

|

||||

for i in range(cfg_len):

|

||||

ops.append('%X:1:HEX' % i)

|

||||

dsc_lines.append(' # !BSF OPTION:{%s}' % (', '.join(ops)))

|

||||

else:

|

||||

dsc_lines.append(

|

||||

' # !BSF NAME:{%s} TYPE:{%s, %s,(0x%X, 0x%X)}' %

|

||||

(prompt, option['type'], option['option'][2],

|

||||

option['option'][0], option['option'][1]))

|

||||

dsc_lines.append(' # !BSF HELP:{%s}' % option['help'])

|

||||

|

||||

if ',' in default:

|

||||

default = '{%s}' % default

|

||||

dsc_lines.append(' gCfgData.%-30s | * | 0x%04X | %s' %

|

||||

(name, option['length'], default))

|

||||

|

||||

if dsc_file:

|

||||

fd = open(dsc_file, 'w')

|

||||

fd.write('\n'.join(dsc_lines))

|

||||

fd.close()

|

||||

|

||||

return dsc_lines

|

||||

|

||||

|

||||

class CFspDsc2Yaml():

|

||||

|

||||

def __init__(self):

|

||||

self._Hdr_key_list = ['EMBED', 'STRUCT']

|

||||

self._Bsf_key_list = ['NAME', 'HELP', 'TYPE', 'PAGE', 'PAGES', 'OPTION',

|

||||

'CONDITION', 'ORDER', 'MARKER', 'SUBT', 'FIELD', 'FIND']

|

||||

self._Bsf_key_list = ['NAME', 'HELP', 'TYPE', 'PAGE', 'PAGES',

|

||||

'OPTION', 'CONDITION', 'ORDER', 'MARKER',

|

||||

'SUBT', 'FIELD', 'FIND']

|

||||

self.gen_cfg_data = None

|

||||

self.cfg_reg_exp = re.compile(r"^([_a-zA-Z0-9$\(\)]+)\s*\|\s*(0x[0-9A-F]+|\*)\s*\|"

|

||||

+ r"\s*(\d+|0x[0-9a-fA-F]+)\s*\|\s*(.+)")

|

||||

self.bsf_reg_exp = re.compile(r"(%s):{(.+?)}(?:$|\s+)" % '|'.join(self._Bsf_key_list))

|

||||

self.hdr_reg_exp = re.compile(r"(%s):{(.+?)}" % '|'.join(self._Hdr_key_list))

|

||||

self.cfg_reg_exp = re.compile(

|

||||

"^([_a-zA-Z0-9$\\(\\)]+)\\s*\\|\\s*(0x[0-9A-F]+|\\*)"

|

||||

"\\s*\\|\\s*(\\d+|0x[0-9a-fA-F]+)\\s*\\|\\s*(.+)")

|

||||

self.bsf_reg_exp = re.compile("(%s):{(.+?)}(?:$|\\s+)"

|

||||

% '|'.join(self._Bsf_key_list))

|

||||

self.hdr_reg_exp = re.compile("(%s):{(.+?)}"

|

||||

% '|'.join(self._Hdr_key_list))

|

||||

self.prefix = ''

|

||||

self.unused_idx = 0

|

||||

self.offset = 0

|

||||

@ -290,15 +51,15 @@ class CFspDsc2Yaml():

|

||||

"""

|

||||

Load and parse a DSC CFGDATA file.

|

||||

"""

|

||||

gen_cfg_data = CGenCfgOpt('FSP')

|

||||

gen_cfg_data = CGenCfgData('FSP')

|

||||

if file_name.endswith('.dsc'):

|

||||

# if gen_cfg_data.ParseDscFileYaml(file_name, '') != 0:

|

||||

if gen_cfg_data.ParseDscFile(file_name, '') != 0:

|

||||

if gen_cfg_data.ParseDscFile(file_name) != 0:

|

||||

raise Exception('DSC file parsing error !')

|

||||

if gen_cfg_data.CreateVarDict() != 0:

|

||||

raise Exception('DSC variable creation error !')

|

||||

else:

|

||||

raise Exception('Unsupported file "%s" !' % file_name)

|

||||

gen_cfg_data.UpdateDefaultValue()

|

||||

self.gen_cfg_data = gen_cfg_data

|

||||

|

||||

def print_dsc_line(self):

|

||||

@ -312,14 +73,15 @@ class CFspDsc2Yaml():

|

||||

"""

|

||||

Format a CFGDATA item into YAML format.

|

||||

"""

|

||||

if(not text.startswith('!expand')) and (': ' in text):

|

||||

if (not text.startswith('!expand')) and (': ' in text):

|

||||

tgt = ':' if field == 'option' else '- '

|

||||

text = text.replace(': ', tgt)

|

||||

lines = text.splitlines()

|

||||

if len(lines) == 1 and field != 'help':

|

||||

return text

|

||||

else:

|

||||

return '>\n ' + '\n '.join([indent + i.lstrip() for i in lines])

|

||||

return '>\n ' + '\n '.join(

|

||||

[indent + i.lstrip() for i in lines])

|

||||

|

||||

def reformat_pages(self, val):

|

||||

# Convert XXX:YYY into XXX::YYY format for page definition

|

||||

@ -355,14 +117,16 @@ class CFspDsc2Yaml():

|

||||

cfg['page'] = self.reformat_pages(cfg['page'])

|

||||

|

||||

if 'struct' in cfg:

|

||||

cfg['value'] = self.reformat_struct_value(cfg['struct'], cfg['value'])

|

||||

cfg['value'] = self.reformat_struct_value(

|

||||

cfg['struct'], cfg['value'])

|

||||

|

||||

def parse_dsc_line(self, dsc_line, config_dict, init_dict, include):

|

||||

"""

|

||||

Parse a line in DSC and update the config dictionary accordingly.

|

||||

"""

|

||||

init_dict.clear()

|

||||

match = re.match(r'g(CfgData|\w+FspPkgTokenSpaceGuid)\.(.+)', dsc_line)

|

||||

match = re.match('g(CfgData|\\w+FspPkgTokenSpaceGuid)\\.(.+)',

|

||||

dsc_line)

|

||||

if match:

|

||||

match = self.cfg_reg_exp.match(match.group(2))

|

||||

if not match:

|

||||

@ -385,7 +149,7 @@ class CFspDsc2Yaml():

|

||||

self.offset = offset + int(length, 0)

|

||||

return True

|

||||

|

||||

match = re.match(r"^\s*#\s+!([<>])\s+include\s+(.+)", dsc_line)

|

||||

match = re.match("^\\s*#\\s+!([<>])\\s+include\\s+(.+)", dsc_line)

|

||||

if match and len(config_dict) == 0:

|

||||

# !include should not be inside a config field

|

||||

# if so, do not convert include into YAML

|

||||

@ -398,7 +162,7 @@ class CFspDsc2Yaml():

|

||||

config_dict['include'] = ''

|

||||

return True

|

||||

|

||||

match = re.match(r"^\s*#\s+(!BSF|!HDR)\s+(.+)", dsc_line)

|

||||

match = re.match("^\\s*#\\s+(!BSF|!HDR)\\s+(.+)", dsc_line)

|

||||

if not match:

|

||||

return False

|

||||

|

||||

@ -434,16 +198,19 @@ class CFspDsc2Yaml():

|

||||

tmp_name = parts[0][:-5]

|

||||

if tmp_name == 'CFGHDR':

|

||||

cfg_tag = '_$FFF_'

|

||||

sval = '!expand { %s_TMPL : [ ' % tmp_name + '%s, %s, ' % (parts[1], cfg_tag) \

|

||||

+ ', '.join(parts[2:]) + ' ] }'

|

||||

sval = '!expand { %s_TMPL : [ ' % \

|

||||

tmp_name + '%s, %s, ' % (parts[1], cfg_tag) + \

|

||||

', '.join(parts[2:]) + ' ] }'

|

||||

else:

|

||||

sval = '!expand { %s_TMPL : [ ' % tmp_name + ', '.join(parts[1:]) + ' ] }'

|

||||

sval = '!expand { %s_TMPL : [ ' % \

|

||||

tmp_name + ', '.join(parts[1:]) + ' ] }'

|

||||

config_dict.clear()

|

||||

config_dict['cname'] = tmp_name

|

||||

config_dict['expand'] = sval

|

||||

return True

|

||||

else:

|

||||

if key in ['name', 'help', 'option'] and val.startswith('+'):

|

||||

if key in ['name', 'help', 'option'] and \

|

||||

val.startswith('+'):

|

||||

val = config_dict[key] + '\n' + val[1:]

|

||||

if val.strip() == '':

|

||||

val = "''"

|

||||

@ -493,21 +260,23 @@ class CFspDsc2Yaml():

|

||||

include_file = ['.']

|

||||

|

||||

for line in lines:

|

||||

match = re.match(r"^\s*#\s+!([<>])\s+include\s+(.+)", line)

|

||||

match = re.match("^\\s*#\\s+!([<>])\\s+include\\s+(.+)", line)

|

||||

if match:

|

||||

if match.group(1) == '<':

|

||||

include_file.append(match.group(2))

|

||||

else:

|

||||

include_file.pop()

|

||||

|

||||

match = re.match(r"^\s*#\s+(!BSF)\s+DEFT:{(.+?):(START|END)}", line)

|

||||

match = re.match(

|

||||

"^\\s*#\\s+(!BSF)\\s+DEFT:{(.+?):(START|END)}", line)

|

||||

if match:

|

||||

if match.group(3) == 'START' and not template_name:

|

||||

template_name = match.group(2).strip()

|

||||

temp_file_dict[template_name] = list(include_file)

|

||||

bsf_temp_dict[template_name] = []

|

||||

if match.group(3) == 'END' and (template_name == match.group(2).strip()) \

|

||||

and template_name:

|

||||

if match.group(3) == 'END' and \

|

||||

(template_name == match.group(2).strip()) and \

|

||||

template_name:

|

||||

template_name = ''

|

||||

else:

|

||||

if template_name:

|

||||

@ -531,12 +300,14 @@ class CFspDsc2Yaml():

|

||||

init_dict.clear()

|

||||

padding_dict = {}

|

||||

cfgs.append(padding_dict)

|

||||

padding_dict['cname'] = 'UnusedUpdSpace%d' % self.unused_idx

|

||||

padding_dict['cname'] = 'UnusedUpdSpace%d' % \

|

||||

self.unused_idx

|

||||

padding_dict['length'] = '0x%x' % num

|

||||

padding_dict['value'] = '{ 0 }'

|

||||

self.unused_idx += 1

|

||||

|

||||

if cfgs and cfgs[-1]['cname'][0] != '@' and config_dict['cname'][0] == '@':

|

||||

if cfgs and cfgs[-1]['cname'][0] != '@' and \

|

||||

config_dict['cname'][0] == '@':

|

||||

# it is a bit field, mark the previous one as virtual

|

||||

cname = cfgs[-1]['cname']

|

||||

new_cfg = dict(cfgs[-1])

|

||||

@ -545,7 +316,8 @@ class CFspDsc2Yaml():

|

||||

cfgs[-1]['cname'] = cname

|

||||

cfgs.append(new_cfg)

|

||||

|

||||

if cfgs and cfgs[-1]['cname'] == 'CFGHDR' and config_dict['cname'][0] == '<':

|

||||

if cfgs and cfgs[-1]['cname'] == 'CFGHDR' and \

|

||||

config_dict['cname'][0] == '<':

|

||||

# swap CfgHeader and the CFG_DATA order

|

||||

if ':' in config_dict['cname']:

|

||||

# replace the real TAG for CFG_DATA

|

||||

@ -661,7 +433,7 @@ class CFspDsc2Yaml():

|

||||

lines = []

|

||||

for each in self.gen_cfg_data._MacroDict:

|

||||

key, value = self.variable_fixup(each)

|

||||

lines.append('%-30s : %s' % (key, value))

|

||||

lines.append('%-30s : %s' % (key, value))

|

||||

return lines

|

||||

|

||||

def output_template(self):

|

||||

@ -671,7 +443,8 @@ class CFspDsc2Yaml():

|

||||

self.offset = 0

|

||||

self.base_offset = 0

|

||||

start, end = self.get_section_range('PcdsDynamicVpd.Tmp')

|

||||

bsf_temp_dict, temp_file_dict = self.process_template_lines(self.gen_cfg_data._DscLines[start:end])

|

||||

bsf_temp_dict, temp_file_dict = self.process_template_lines(

|

||||

self.gen_cfg_data._DscLines[start:end])

|

||||

template_dict = dict()

|

||||

lines = []

|

||||

file_lines = {}

|

||||

@ -679,15 +452,18 @@ class CFspDsc2Yaml():

|

||||

file_lines[last_file] = []

|

||||

|

||||

for tmp_name in temp_file_dict:

|

||||

temp_file_dict[tmp_name][-1] = self.normalize_file_name(temp_file_dict[tmp_name][-1], True)

|

||||

temp_file_dict[tmp_name][-1] = self.normalize_file_name(

|

||||

temp_file_dict[tmp_name][-1], True)

|

||||

if len(temp_file_dict[tmp_name]) > 1:

|

||||

temp_file_dict[tmp_name][-2] = self.normalize_file_name(temp_file_dict[tmp_name][-2], True)

|

||||

temp_file_dict[tmp_name][-2] = self.normalize_file_name(

|

||||

temp_file_dict[tmp_name][-2], True)

|

||||

|

||||

for tmp_name in bsf_temp_dict:

|

||||

file = temp_file_dict[tmp_name][-1]

|

||||

if last_file != file and len(temp_file_dict[tmp_name]) > 1:

|

||||

inc_file = temp_file_dict[tmp_name][-2]

|

||||

file_lines[inc_file].extend(['', '- !include %s' % temp_file_dict[tmp_name][-1], ''])

|

||||

file_lines[inc_file].extend(

|

||||

['', '- !include %s' % temp_file_dict[tmp_name][-1], ''])

|

||||

last_file = file

|

||||

if file not in file_lines:

|

||||

file_lines[file] = []

|

||||

@ -708,7 +484,8 @@ class CFspDsc2Yaml():

|

||||

self.offset = 0

|

||||

self.base_offset = 0

|

||||

start, end = self.get_section_range('PcdsDynamicVpd.Upd')

|

||||

cfgs = self.process_option_lines(self.gen_cfg_data._DscLines[start:end])

|

||||

cfgs = self.process_option_lines(

|

||||

self.gen_cfg_data._DscLines[start:end])

|

||||

self.config_fixup(cfgs)

|

||||

file_lines = self.output_dict(cfgs, True)

|

||||

return file_lines

|

||||

@ -721,13 +498,17 @@ class CFspDsc2Yaml():

|

||||

level = 0

|

||||

file = '.'

|

||||

for each in cfgs:

|

||||

if 'length' in each and int(each['length'], 0) == 0:

|

||||

continue

|

||||

if 'length' in each:

|

||||

if not each['length'].endswith('b') and int(each['length'],

|

||||

0) == 0:

|

||||

continue

|

||||

|

||||

if 'include' in each:

|

||||

if each['include']:

|

||||

each['include'] = self.normalize_file_name(each['include'])

|

||||

file_lines[file].extend(['', '- !include %s' % each['include'], ''])

|

||||

each['include'] = self.normalize_file_name(

|

||||

each['include'])

|

||||

file_lines[file].extend(

|

||||

['', '- !include %s' % each['include'], ''])

|

||||

file = each['include']

|

||||

else:

|

||||

file = '.'

|

||||

@ -766,7 +547,8 @@ class CFspDsc2Yaml():

|

||||

for field in each:

|

||||

if field in ['cname', 'expand', 'include']:

|

||||

continue

|

||||

value_str = self.format_value(field, each[field], padding + ' ' * 16)

|

||||

value_str = self.format_value(

|

||||

field, each[field], padding + ' ' * 16)

|

||||

full_line = ' %s %-12s : %s' % (padding, field, value_str)

|

||||

lines.extend(full_line.splitlines())

|

||||

|

||||

@ -802,11 +584,13 @@ def dsc_to_yaml(dsc_file, yaml_file):

|

||||

if file == '.':

|

||||

cfgs[cfg] = lines

|

||||

else:

|

||||

if('/' in file or '\\' in file):

|

||||

if ('/' in file or '\\' in file):

|

||||

continue

|

||||

file = os.path.basename(file)

|

||||

fo = open(os.path.join(file), 'w')

|

||||

fo.write(__copyright_tmp__ % (cfg, date.today().year) + '\n\n')

|

||||

out_dir = os.path.dirname(file)

|

||||

fo = open(os.path.join(out_dir, file), 'w')

|

||||

fo.write(__copyright_tmp__ % (

|

||||

cfg, date.today().year) + '\n\n')

|

||||

for line in lines:

|

||||

fo.write(line + '\n')

|

||||

fo.close()

|

||||

@ -821,13 +605,11 @@ def dsc_to_yaml(dsc_file, yaml_file):

|

||||

|

||||

fo.write('\n\ntemplate:\n')

|

||||

for line in cfgs['Template']:

|

||||

if line != '':

|

||||

fo.write(' ' + line + '\n')

|

||||

fo.write(' ' + line + '\n')

|

||||

|

||||

fo.write('\n\nconfigs:\n')

|

||||

for line in cfgs['Option']:

|

||||

if line != '':

|

||||

fo.write(' ' + line + '\n')

|

||||

fo.write(' ' + line + '\n')

|

||||

|

||||

fo.close()

|

||||

|

||||

@ -864,7 +646,8 @@ def main():

    bsf_file = sys.argv[1]
    yaml_file = sys.argv[2]
    if os.path.isdir(yaml_file):
        yaml_file = os.path.join(yaml_file, get_fsp_name_from_path(bsf_file) + '.yaml')
        yaml_file = os.path.join(
            yaml_file, get_fsp_name_from_path(bsf_file) + '.yaml')

    if bsf_file.endswith('.dsc'):
        dsc_file = bsf_file
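
The DSC lines written by `generate_dsc` and the pattern compiled into `cfg_reg_exp` can be checked against each other. The sketch below copies the format string and both patterns from the code in this diff; the helper name `split_cfg_line`, the sample item, and the `strip()` call are assumptions added for illustration, not part of the tool.

```python
import re

# Patterns copied from CFspDsc2Yaml.__init__ and parse_dsc_line.
cfg_reg_exp = re.compile(
    r"^([_a-zA-Z0-9$\(\)]+)\s*\|\s*(0x[0-9A-F]+|\*)"
    r"\s*\|\s*(\d+|0x[0-9a-fA-F]+)\s*\|\s*(.+)")
prefix_exp = re.compile(r'g(CfgData|\w+FspPkgTokenSpaceGuid)\.(.+)')


def split_cfg_line(dsc_line):
    """Hypothetical helper: split one DSC item line into its four fields."""
    match = prefix_exp.match(dsc_line.strip())
    if not match:
        return None
    match = cfg_reg_exp.match(match.group(2))
    if not match:
        return None
    name, offset, length, value = match.groups()
    return name, offset, int(length, 0), value.strip()


# Same format string that generate_dsc uses for each item line.
line = ' gCfgData.%-30s | * | 0x%04X | %s' % ('Signature', 8, '0x1234')
print(split_cfg_line(line))  # ('Signature', '*', 8, '0x1234')
```

The `*` in the offset column is accepted by the second capture group, which is why `generate_dsc` can emit items without computing explicit offsets.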

2637

IntelFsp2Pkg/Tools/FspGenCfgData.py

Normal file

File diff suppressed because it is too large

45

IntelFsp2Pkg/Tools/UserManuals/ConfigEditorUserManual.md

Normal file

@ -0,0 +1,45 @@

|

||||

#Name

|

||||

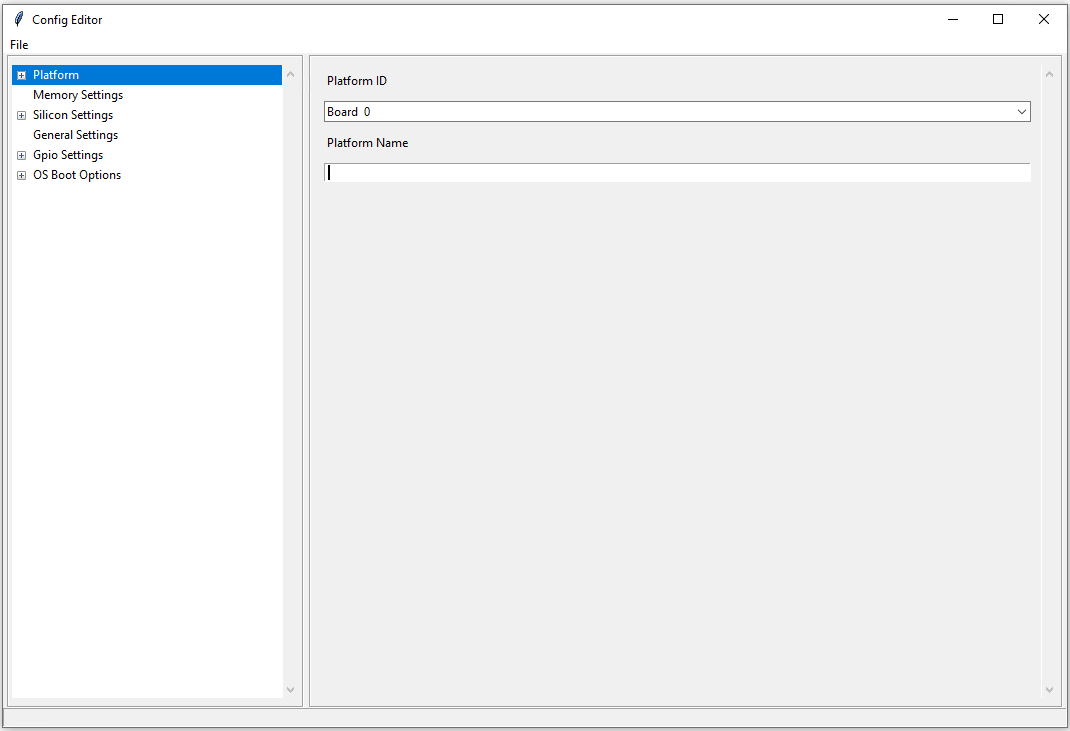

**ConfigEditor.py** is a python script with a GUI interface that can support changing configuration settings directly from the interface without having to modify the source.

|

||||

|

||||

#Description

|

||||

This is a GUI interface that can be used by users who would like to change configuration settings directly from the interface without having to modify the SBL source.

|

||||

This tool depends on Python GUI tool kit Tkinter. It runs on both Windows and Linux.

|

||||

The user needs to load the YAML file along with DLT file for a specific board into the ConfigEditor, change the desired configuration values. Finally, generate a new configuration delta file or a config binary blob for the newly changed values to take effect. These will be the inputs to the merge tool or the stitch tool so that new config changes can be merged and stitched into the final configuration blob.

|

||||

|

||||

|

||||

It supports the following options:

|

||||

|

||||

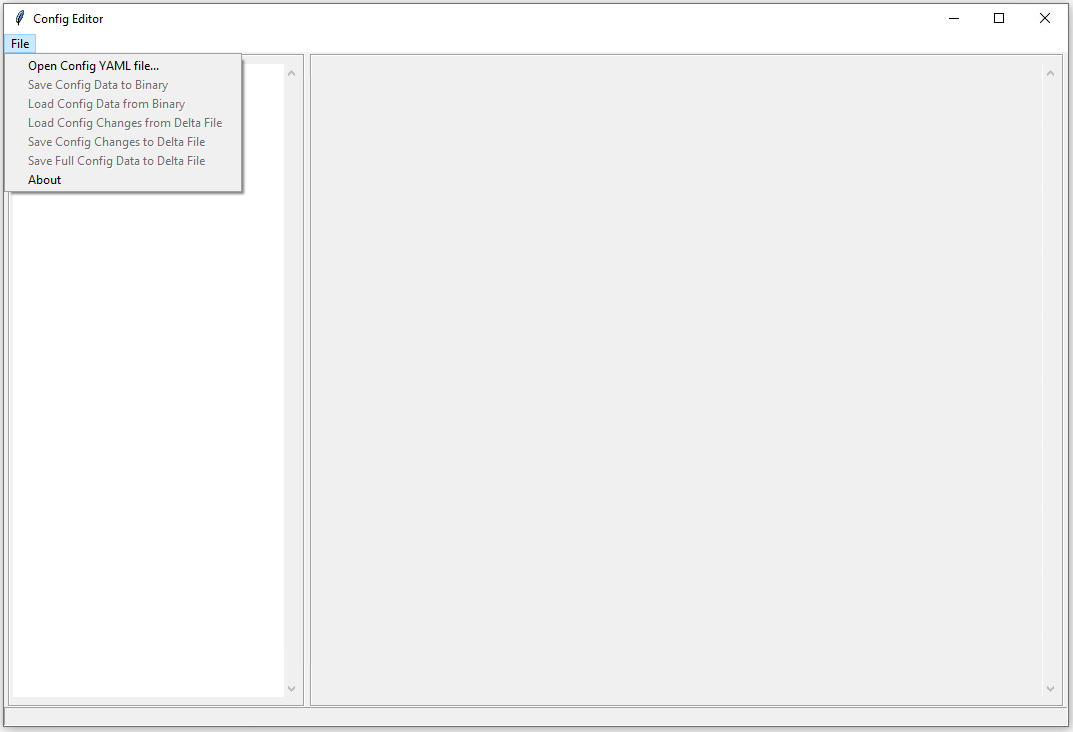

## 1. Open Config YAML file

|

||||

This option loads the YAML file for a FSP UPD into the ConfigEditor to change the desired configuration values.

|

||||

|

||||

#####Example:

|

||||

```

|

||||

|

||||

|

||||

|

||||

```

|

||||

|

||||

## 2. Open Config BSF file
This option loads the BSF file for an FSP UPD into the ConfigEditor to change the desired configuration values. It works in a similar fashion to the Binary Configuration Tool (BCT).

## 3. Show Binary Information
This option loads configuration data from an FD file and displays it in the ConfigEditor.

## 4. Save Config Data to Binary
This option generates a config binary blob so that the newly changed values take effect.

## 5. Load Config Data from Binary
This option reloads the changed configuration from a BIN file into the ConfigEditor.

## 6. Load Config Changes from Delta File
This option loads the changed configuration values from a Delta file into the ConfigEditor.

## 7. Save Config Changes to Delta File
This option generates a new configuration delta file so that the newly changed values take effect.

## 8. Save Full Config Data to Delta File
This option saves all the changed configuration values into a Delta file.


## Running Configuration Editor:

**python ConfigEditor.py**
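
Because the tool depends on Tkinter, it can help to confirm that the module is importable before launching the editor. The following is a minimal sketch, not part of ConfigEditor.py; it assumes Python 3 (where the module is named `tkinter`), and the helper name `tkinter_available` is hypothetical.

```python
def tkinter_available():
    """Return True if the tkinter module can be imported."""
    try:
        import tkinter  # noqa: F401  (imported only to test availability)
    except ImportError:
        return False
    return True


if __name__ == "__main__":
    if tkinter_available():
        print("tkinter found; the ConfigEditor GUI dependency is present")
    else:
        print("tkinter missing; install the Tk bindings for your Python")
```

Importing the module does not open a window, so the check can also run on a machine without a display.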
